Quick access to accurate product data is critical to hardware engineering. Every decision, from design iterations to supplier selection, relies on knowing precisely what’s in the system: which parts are approved, which assemblies are under revision, what lead times and costs look like, and whether the data is up to date. When that information is hard to find, progress stalls, errors creep in, and teams start working from assumptions instead of facts.

Yet in most PLM (Product Lifecycle Management) systems, the search function is constrained by rigid filters, static views, and field-specific queries. Users need to memorize field names or learn SQL (Structured Query Language) just to perform routine lookups. If you don’t know how the data is structured or which metadata field to search, you’re out of luck.

This extra work adds up, creating unnecessary friction in daily workflows like reviewing parts, checking revision status, and validating supplier information. Time spent navigating product hierarchies, filtering BOMs, or requesting reports from system admins can easily consume hours each week – time that would be better spent on design, sourcing, and decision-making.

Natural language search offers a different model. Users can describe what they’re looking for in plain language, and the system handles the translation. That means no special syntax, no workaround reports, and no digging through admin settings.

Traditional PLM Search Slows Teams Down

Accessing product data should be fast and intuitive, but traditional PLM systems bury this data behind rigid filters, inconsistent field naming, and limited query capabilities. Without knowing exactly how the data is structured, engineers are left guessing.

As a result, tracing how a subassembly rolls into a top-level product often means exporting data and reconstructing the hierarchy offline. Finding every assembly that includes a specific component takes a series of manual clicks, cross-referencing part numbers across views, or a request to a system admin for a custom report.

These slowdowns compound quickly. They interrupt workflows, obscure critical information, and create unnecessary friction between teams. When engineering, sourcing, and operations can’t access the same product structure in real time, decisions get delayed, and mistakes get made.

What Is Natural Language Search?

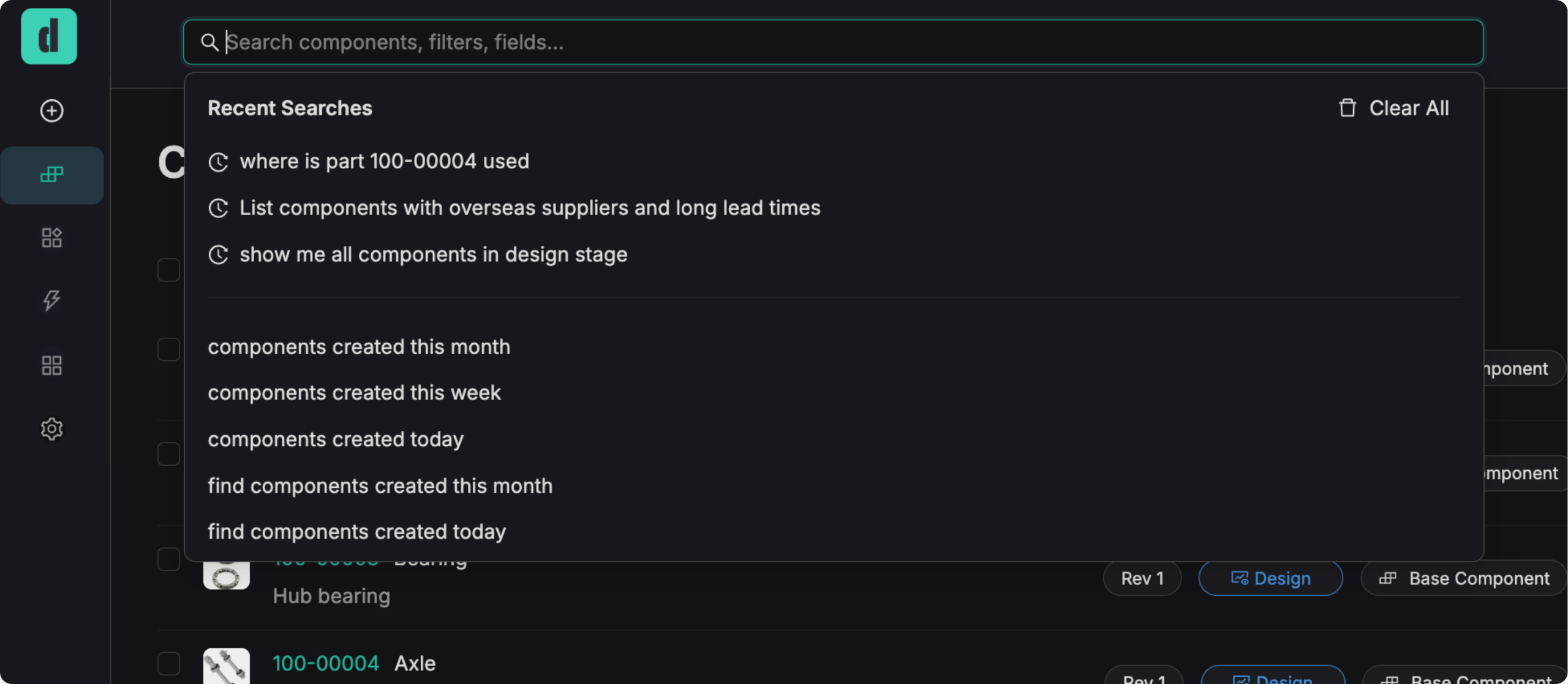

Natural language search replaces rigid query inputs with a flexible, intent-driven interface. Instead of requiring users to know specific field names or database structures, it allows them to describe what they need in plain language and get back precise, relevant results.

This is more than a UI update; it fundamentally changes how users interact with PLM data. A sourcing manager might ask, “Show me all components added in the last 30 days over $500,” while an engineer looks for “assemblies waiting for release approval.” The system parses the request, maps it to the appropriate fields and logic, and returns structured data, so no manual filtering or post-processing is required.

By translating everyday language into structured queries, natural language search removes one of the most significant barriers to data access. It allows engineers, program managers, and supply chain teams to get the information they need, when they need it, without interrupting workflows or escalating to PLM admins.
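To make the idea of "translating everyday language into structured queries" concrete, here is a minimal Python sketch of what the structured side of that translation could look like. It is an illustration only, not Duro Design's implementation: the `Condition` type, the field names (`category`, `created`, `unit_cost`), the sample records, and the hand-written `recent_high_cost` function (which stands in for the language-parsing step) are all assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Any, Callable
import operator

# Hypothetical set of comparison operators a structured query might support.
OPS: dict[str, Callable[[Any, Any], bool]] = {
    "=": operator.eq,
    ">": operator.gt,
    ">=": operator.ge,
    "<": operator.lt,
}


@dataclass(frozen=True)
class Condition:
    field: str  # hypothetical field name, e.g. "unit_cost"
    op: str     # one of the keys in OPS
    value: Any


def run_query(records: list[dict], conditions: list[Condition]) -> list[dict]:
    """Return the records that satisfy every condition (logical AND)."""
    return [
        r for r in records
        if all(OPS[c.op](r[c.field], c.value) for c in conditions)
    ]


def recent_high_cost(today: date) -> list[Condition]:
    """Hand-written stand-in for what a parser might emit for
    'Show me all components added in the last 30 days over $500'."""
    return [
        Condition("category", "=", "component"),
        Condition("created", ">=", today - timedelta(days=30)),
        Condition("unit_cost", ">", 500),
    ]


# Made-up sample data for illustration.
today = date(2025, 6, 30)
records = [
    {"pn": "100-00001", "category": "component", "created": date(2025, 6, 20), "unit_cost": 750.0},
    {"pn": "100-00002", "category": "component", "created": date(2025, 3, 1), "unit_cost": 900.0},
    {"pn": "100-00003", "category": "component", "created": date(2025, 6, 25), "unit_cost": 120.0},
    {"pn": "200-00001", "category": "assembly", "created": date(2025, 6, 28), "unit_cost": 2400.0},
]

matches = run_query(records, recent_high_cost(today))
print([r["pn"] for r in matches])  # ['100-00001']
```

The point of the sketch is the shape of the output: once a request has been reduced to a list of field, operator, and value conditions, running it is ordinary filtering. The hard part, which this sketch skips entirely, is producing those conditions from free-form text.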

Natural Language Search Examples

The contrast between traditional search methods and natural language search is most obvious in practice. Here’s how common tasks change when intent drives the query:

| Use Case | Traditional PLM Search | Natural Language Search |
| --- | --- | --- |
| Find recent high-cost parts | Set multiple filters across cost and date fields, sort manually | “Show parts over $1000 added this quarter” |
| Track release status of assemblies | Manually check approval fields across records | “List assemblies still waiting for release” |
| Identify where a component is used | Run multi-level BOM reports, cross-reference part numbers | “Where is part 100-00004 used?” |
| Flag sourcing risk by supplier type | Export BOM, merge with supplier data, filter manually | “List components with overseas suppliers and long lead times” |
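The where-used lookup in the comparison above is a useful one to picture in code, because it shows why a single-level filter is not enough. The sketch below is a minimal illustration under stated assumptions, not a description of how any particular PLM stores or queries a BOM: the `bom` dictionary, its part numbers (other than 100-00004, which comes from the example query), and the `where_used` function are hypothetical.

```python
from collections import defaultdict, deque

# Hypothetical multi-level BOM: parent part number -> direct child part numbers.
bom = {
    "900-00001": ["500-00010", "500-00020"],
    "500-00010": ["100-00004", "100-00007"],
    "500-00020": ["300-00002"],
    "300-00002": ["100-00004"],
}


def where_used(bom: dict[str, list[str]], part: str) -> list[str]:
    """Return every assembly that contains `part` at any level, sorted."""
    # Invert the BOM so each child points at its direct parents.
    parents = defaultdict(set)
    for parent, children in bom.items():
        for child in children:
            parents[child].add(parent)

    # Breadth-first walk upward from the part through all ancestors.
    found = set()
    queue = deque([part])
    while queue:
        current = queue.popleft()
        for parent in parents[current]:
            if parent not in found:
                found.add(parent)
                queue.append(parent)
    return sorted(found)


print(where_used(bom, "100-00004"))
# ['300-00002', '500-00010', '500-00020', '900-00001']
```

Here the part appears directly in two subassemblies and indirectly in two more, which is the kind of result that otherwise takes a multi-level report and manual cross-referencing to assemble.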

Powered by LLMs, Tuned for Engineering Context

Natural language search in Duro Design PLM is built on large language models (LLMs) trained to interpret plain language in the context of structured engineering data.

These models recognize intent even when phrasing is imprecise, and they understand common synonyms across roles – “release” vs. “approve,” “component” vs. “part,” “supplier” vs. “vendor.” They also infer relationships between parts, assemblies, revisions, sourcing metadata, and change orders, allowing users to ask complex, multi-dimensional questions without needing to break them into filterable fields.
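As a rough intuition for the synonym handling described above, consider the simplest possible version: a lookup table that rewrites role-specific wording into one canonical vocabulary before a query is interpreted. This is a deliberately naive stand-in, since a language model handles phrasing far more flexibly than a fixed table. The `CANONICAL` mapping, the choice of which term in each pair counts as canonical, and the `normalize` function are all assumptions made for illustration.

```python
import re

# Hypothetical synonym table: role-specific wording -> canonical term.
# Which side of each pair is "canonical" is an arbitrary choice here.
CANONICAL = {
    "approve": "release",
    "approval": "release",
    "part": "component",
    "parts": "components",
    "vendor": "supplier",
    "vendors": "suppliers",
}


def normalize(query: str) -> str:
    """Lowercase the query and replace known synonyms with canonical terms."""
    words = re.findall(r"[a-z0-9$-]+", query.lower())
    return " ".join(CANONICAL.get(word, word) for word in words)


print(normalize("List parts from overseas vendors"))
# list components from overseas suppliers
```

A table like this breaks down quickly on ambiguous or unseen wording, which is the gap that intent-aware models are meant to close.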

Unlike bolt-on AI tools that operate outside the PLM, Duro Design’s natural language engine is built into the system itself. Queries run directly against your live product data, so the results reflect real-time status, not an approximate snapshot.

To prevent mismatches between what a user asks and what the system returns, Duro Design also shows its interpreted query logic alongside the results. For example, if a user types “Show me assemblies pending release,” they will see Duro Design’s interpretation displayed below the search input: Status = ‘Pending’ AND Type = ‘Assembly’.

This feedback loop improves clarity, builds trust, and makes it easier to refine queries on the fly, without needing to escalate to a PLM admin or second-guess the data.
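The interpreted-query display described above can be pictured as a small rendering step: take the structured conditions and print them in a form a person can check at a glance. The sketch below assumes conditions are held as simple (field, operator, value) tuples. That representation and the `describe` function are hypothetical, and the only detail taken from the article is the target string itself.

```python
def describe(conditions: list[tuple[str, str, object]]) -> str:
    """Render (field, operator, value) conditions as a readable expression."""
    parts = []
    for field, op, value in conditions:
        # Quote string values so they are visually distinct from numbers.
        shown = f"'{value}'" if isinstance(value, str) else str(value)
        parts.append(f"{field} {op} {shown}")
    return " AND ".join(parts)


# Hypothetical interpretation of "Show me assemblies pending release".
interpreted = [("Status", "=", "Pending"), ("Type", "=", "Assembly")]
print(describe(interpreted))
# Status = 'Pending' AND Type = 'Assembly'
```

Showing this string next to the results lets a user spot a wrong interpretation (for example, the wrong status value) and rephrase before acting on the data.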

As usage grows, the system learns. It adapts to the terminology, phrasing, and patterns unique to your team, continuously improving precision across roles and workflows.

Business Impact: Real-World Use Cases

Natural language search reduces friction across the product lifecycle, and that friction matters. When engineers can’t access the data they need, decisions stall. When sourcing managers have to chase down part details or lead time information, supplier negotiations lose momentum. When teams operate on outdated or inconsistent information, production slows, and errors multiply.

Natural language search avoids bottlenecks at the source by making product data accessible through plain language. The result is faster decisions, better alignment across functions, and fewer preventable delays.

The business outcomes are tangible:

- Shorter development cycles: Less time spent hunting for the right information means more time acting on it.

- Cross-functional clarity: Engineering, operations, and supply chain teams operate from the same accurate, up-to-date information.

- Onboarding without bottlenecks: New team members can ask real questions and get real answers without learning internal systems or asking for help.

- Reduced risk: Fewer manual workarounds mean fewer mistakes in critical handoffs or release gates.

PLM Built for the Way Modern Teams Work

In agile engineering environments, clarity and speed drive outcomes. The ability to ask a question and immediately access the correct data can be the difference between momentum and delay. That means natural language search is no longer a nice-to-have; it’s a necessary competitive edge.

Duro Design is the first fully configurable, AI-native PLM built for modern hardware teams. Natural language search is embedded directly into the platform, with no setup, training, or plug-ins required. It’s designed to be used by anyone, not just system experts, and it returns consistent, trustworthy results that teams can confidently act on.

This is just one aspect of Duro Design’s focus on empowering hardware engineers. With automated validation, sourcing insights, and predictive change analysis built into the platform, Duro Design helps teams reduce friction, make better decisions, and move faster across the entire product lifecycle.